-

MIT researchers advance automated interpretability in AI models

As artificial intelligence models become increasingly prevalent and are integrated into diverse sectors like health care, finance, education, transportation, and entertainment, understanding how they work under the hood is critical. Interpreting the mechanisms underlying AI models enables us to audit them for safety and biases, with the potential to deepen our understanding of the science…

-

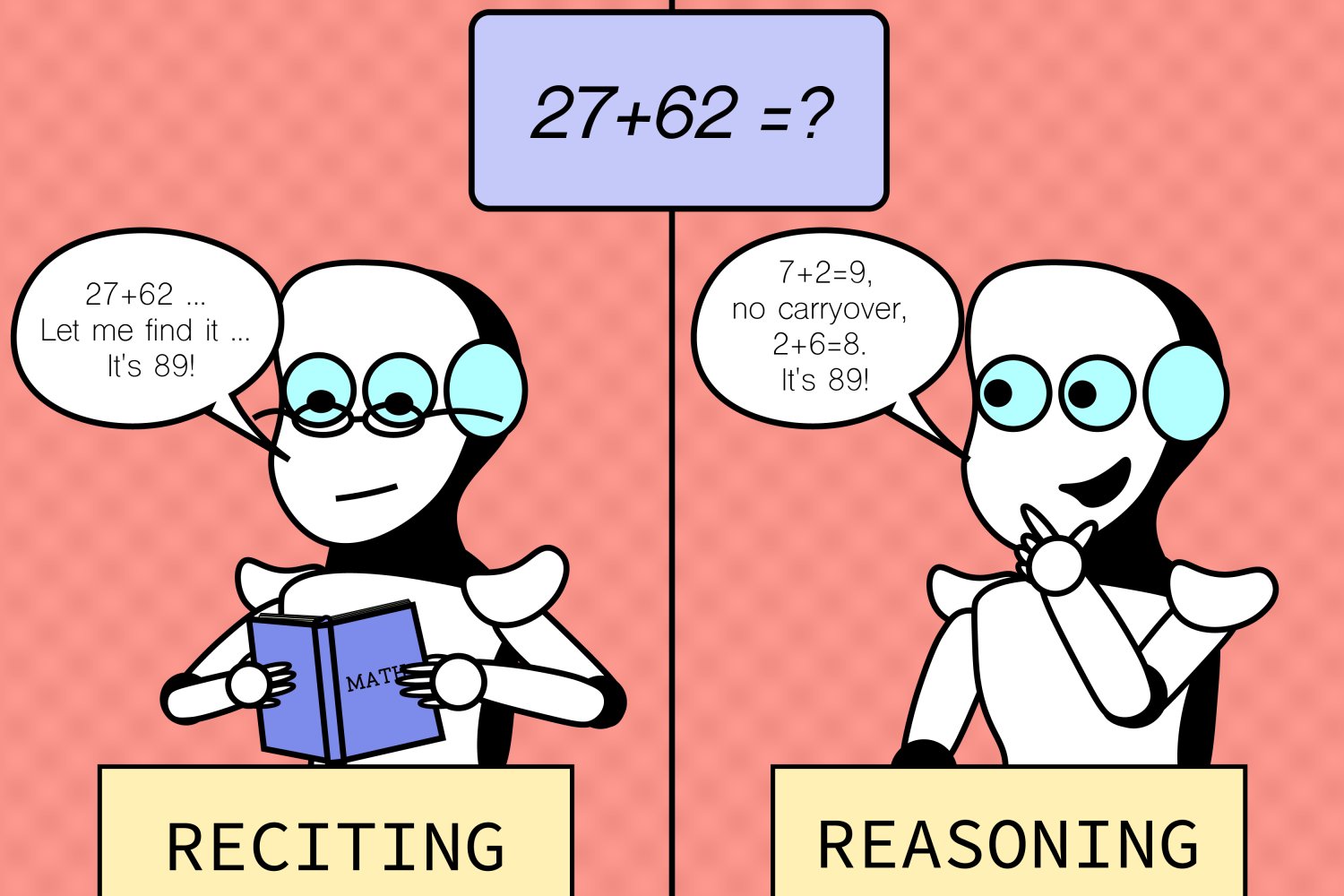

Reasoning skills of large language models are often overestimated

When it comes to artificial intelligence, appearances can be deceiving. The mystery surrounding the inner workings of large language models (LLMs) stems from their vast size, complex training methods, hard-to-predict behaviors, and elusive interpretability. MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) researchers recently peered through the proverbial magnifying glass to examine how LLMs fare…

-

To build a better AI helper, start by modeling the irrational behavior of humans

To build AI systems that can collaborate effectively with humans, it helps to have a good model of human behavior to start with. But humans tend to behave suboptimally when making decisions. This irrationality, which is especially difficult to model, often boils down to computational constraints. A human can’t spend decades thinking about the ideal…

-

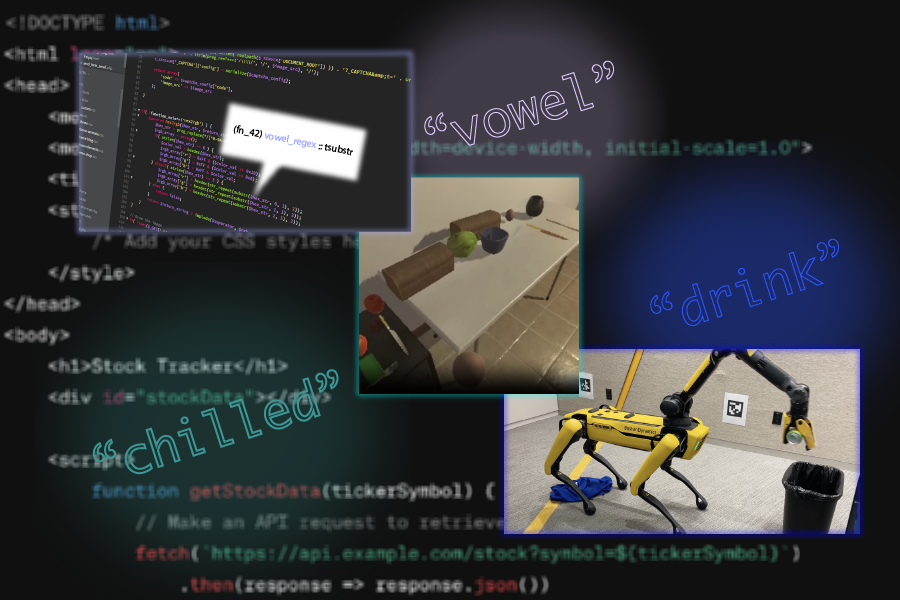

Large language models use a surprisingly simple mechanism to retrieve some stored knowledge

Large language models, such as those that power popular artificial intelligence chatbots like ChatGPT, are incredibly complex. Even though these models are being used as tools in many areas, such as customer support, code generation, and language translation, scientists still don’t fully grasp how they work. In an effort to better understand what is going…

-

Reasoning and reliability in AI

For natural language to be an effective form of communication, the parties involved need to understand words and their context, assume that the content is largely shared in good faith and is trustworthy, reason about the information being shared, and then apply it to real-world scenarios. MIT PhD students interning with…

-

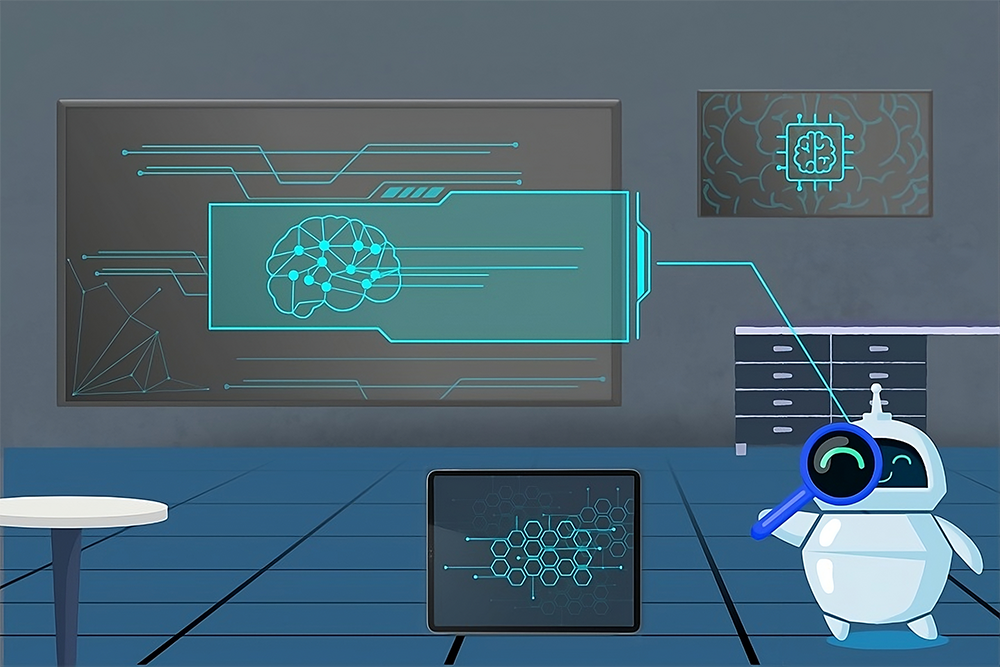

AI agents help explain other AI systems

Explaining the behavior of trained neural networks remains a compelling puzzle, especially as these models grow in size and sophistication. Like other scientific challenges throughout history, reverse-engineering how artificial intelligence systems work requires a substantial amount of experimentation: making hypotheses, intervening on behavior, and even dissecting large networks to examine individual neurons. To date, most…

-

Artificial intelligence for augmentation and productivity

The MIT Stephen A. Schwarzman College of Computing has awarded seed grants to seven projects that are exploring how artificial intelligence and human-computer interaction can be leveraged to enhance modern work spaces to achieve better management and higher productivity. Funded by Andrew W. Houston ’05 and Dropbox Inc., the projects are intended to be interdisciplinary…